AI Tokens: An Essential Guide for Prompt Engineering Beginners

- By Stephen Smith

You’ve probably seen the word “tokens” thrown around a lot when reading about large language models (LLMs) like ChatGPT. But what exactly are tokens, and why do they matter when it comes to AI? Let’s break it down into simple terms.

So What Are Tokens?

Tokens are the basic building blocks of text used by LLMs like ChatGPT, GPT-3, and others. You can think of tokens as the “letters” of an AI system’s alphabet, except that a single token is often a whole word or a chunk of one.

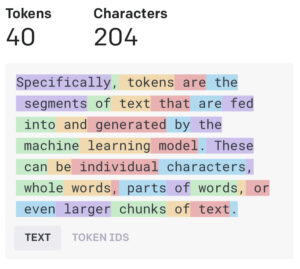

Specifically, tokens are the segments of text that are fed into and generated by the machine learning model. These can be individual characters, whole words, parts of words, or even larger chunks of text. For example, the two sentences you just read contain 34 words but 40 tokens. Exact counts vary from model to model, because each model family uses its own tokenizer. A helpful rule of thumb is that one token corresponds to roughly 4 characters of common English text, or about ¾ of a word (so 100 tokens ≈ 75 words).
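As a quick illustration, the rule of thumb above can be turned into a rough estimator. This is a minimal sketch that only applies the ~4-characters and ~¾-word heuristics; it is not a real tokenizer, so its numbers will differ from what a tool like OpenAI’s Tokenizer reports. The function names are hypothetical.

```python
def estimate_tokens_from_chars(text: str) -> int:
    """Rough estimate: ~4 characters of common English text per token."""
    return round(len(text) / 4)


def estimate_tokens_from_words(word_count: int) -> int:
    """Rough estimate: one token is ~3/4 of a word, so 75 words ~ 100 tokens."""
    return round(word_count / 0.75)


def estimate_words_from_tokens(token_count: int) -> int:
    """Inverse heuristic: 100 tokens ~ 75 words."""
    return round(token_count * 0.75)


sample = (
    "Specifically, tokens are the segments of text that are fed into and "
    "generated by the machine learning model. These can be individual "
    "characters, whole words, parts of words, or even larger chunks of text."
)
words = len(sample.split())
print(words)                              # 34
print(estimate_tokens_from_words(words))  # 45
print(estimate_tokens_from_chars(sample))
```

For the two sentences above, the word-based estimate gives 45 tokens against the 40 a real tokenizer counted, which is the kind of gap to expect from a heuristic. It is good enough for budgeting, but use a tokenizer tool when you need exact numbers.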

The process of breaking text down into tokens is called tokenization. Each token is then mapped to a numeric ID from the model’s vocabulary, which turns human language into a form the AI can process. Tokens become the data used to train, improve, and run AI systems.

The images above were made using OpenAI’s Tokenizer, which you can find on the OpenAI Platform.

I recommend testing it out for yourself! It’s a great tool.
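To make tokenization concrete, here is a toy sketch. It uses a small, hypothetical vocabulary and a greedy longest-match rule: at each position it takes the longest piece the vocabulary contains, then maps each piece to an integer ID. Real LLM tokenizers (OpenAI’s models use byte-pair encoding, for example) learn vocabularies of many thousands of pieces from data, so this illustrates the idea rather than reproducing any production tokenizer.

```python
# Hypothetical toy vocabulary mapping text pieces to integer IDs.
# Real vocabularies are learned from data and are far larger.
TOY_VOCAB = {
    "token": 0, "ization": 1, "s": 2, " ": 3, "matter": 4,
    "t": 5, "o": 6, "k": 7, "e": 8, "n": 9, "i": 10, "z": 11,
    "a": 12, "m": 13, "r": 14,
}


def tokenize(text: str, vocab: dict[str, int]) -> list[str]:
    """Split text into vocabulary pieces, always taking the longest match."""
    max_len = max(len(piece) for piece in vocab)
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest possible piece first, then shrink until one matches.
        for length in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + length]
            if piece in vocab:
                tokens.append(piece)
                i += length
                break
        else:
            raise ValueError(f"No vocabulary entry covers {text[i]!r}")
    return tokens


def encode(text: str, vocab: dict[str, int]) -> list[int]:
    """Map each token to its integer ID, the form a model actually processes."""
    return [vocab[piece] for piece in tokenize(text, vocab)]


print(tokenize("tokenization matters", TOY_VOCAB))
print(encode("tokenization matters", TOY_VOCAB))
```

Here `tokenize("tokenization matters", TOY_VOCAB)` returns `["token", "ization", " ", "matter", "s"]`, and `encode` turns that into `[0, 1, 3, 4, 2]`. Two words became five tokens, which is why token counts usually run higher than word counts.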

Why Do Tokens Matter?

There are three main reasons tokens are important to understand:

- Token Limits: Every LLM has a maximum number of tokens it can handle at once, known as its context window, which covers both your input and the model’s response. This limit ranges from a few thousand tokens for older or smaller models to hundreds of thousands or more for recent commercial ones. Exceeding it can cause errors, cut off the earliest parts of a conversation, or lead to lower-quality responses. Think of it like a friend with limited short-term memory: you have to stay within what they can absorb, or they’ll get overloaded and lose track of the conversation. Token limits work the same way for AI chatbots.

- Cost: Companies like Anthropic, Alphabet, and Microsoft charge based on token usage when people access their AI services through an API. Pricing is typically quoted per 1,000 or per 1 million tokens, often with separate rates for input and output tokens. The more tokens you send and receive, the higher the cost, so keeping token counts down helps control expenses.

- Message Caps: Most chatbots, such as ChatGPT and Claude, limit how many messages you can send within a given time period, even on premium subscriptions. By optimising your prompts and being mindful of their size, you can get more out of your subscription!
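The first two points come down to simple arithmetic you can do before sending a request. The sketch below assumes you already have token counts (from a tokenizer tool or an estimate), and every limit and price in it is a made-up placeholder, not any provider’s real figure. It treats the token limit (often called the context window) as covering the prompt plus the space reserved for the reply, and it quotes prices per million tokens with separate input and output rates, a common convention. `ModelLimits`, `fits_in_context`, and `estimate_cost` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ModelLimits:
    # Hypothetical figures for illustration only; check your provider's docs.
    context_window: int              # max tokens for prompt + response combined
    input_price_per_million: float   # USD per 1M input tokens
    output_price_per_million: float  # USD per 1M output tokens


def fits_in_context(prompt_tokens: int, max_output_tokens: int,
                    limits: ModelLimits) -> bool:
    """True if the prompt plus the room reserved for the reply fits the limit."""
    return prompt_tokens + max_output_tokens <= limits.context_window


def estimate_cost(prompt_tokens: int, output_tokens: int,
                  limits: ModelLimits) -> float:
    """Cost in USD, billing input and output tokens at their separate rates."""
    return (prompt_tokens * limits.input_price_per_million
            + output_tokens * limits.output_price_per_million) / 1_000_000


model = ModelLimits(context_window=8_000,
                    input_price_per_million=3.0,
                    output_price_per_million=15.0)

print(fits_in_context(6_000, 1_500, model))  # True
print(fits_in_context(7_000, 1_500, model))  # False
print(estimate_cost(2_000, 500, model))
```

Reserving room for the reply matters: a 7,000-token prompt fits an 8,000-token limit on its own, but not if you also want up to 1,500 tokens back.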

Strategies for Managing Tokens

Because tokens are central to how LLMs work, it’s important to learn strategies to make the most of them:

- Keep prompts concise and focused on a single topic or question. Don’t overload the AI with tangents.

- Break long conversations into shorter exchanges before hitting token limits.

- Avoid huge blocks of text. Summarize previous parts of a chat before moving on.

- Use a tokenizer tool to count tokens and estimate costs.

- Experiment with different wording to express ideas in fewer tokens.

- For complex requests, try a step-by-step approach rather than cramming everything into one prompt.
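Several of these strategies, such as breaking up long conversations and summarizing earlier parts of a chat, come down to keeping the conversation within a token budget. Here is a minimal sketch of the simplest version: keep the first message (assumed here to hold your standing instructions) plus as many of the most recent messages as fit, dropping older ones. The message format, the helper names, and the ~4-characters-per-token estimate are assumptions for illustration; a fuller approach would replace the dropped messages with a short summary instead of discarding them.

```python
def estimate_tokens(text: str) -> int:
    """Rule-of-thumb estimate: ~4 characters per token (at least 1 if non-empty)."""
    return max(1, round(len(text) / 4)) if text else 0


def trim_history(messages: list[dict], budget: int) -> list[dict]:
    """Keep the first message and the newest messages that fit in `budget` tokens."""
    if not messages:
        return []
    first, rest = messages[0], messages[1:]
    used = estimate_tokens(first["content"])
    kept = []
    # Walk backwards from the newest message so recent context survives.
    for msg in reversed(rest):
        cost = estimate_tokens(msg["content"])
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    # Restore chronological order after the backwards walk.
    return [first] + list(reversed(kept))


history = [
    {"role": "system", "content": "x" * 40},     # ~10 tokens
    {"role": "user", "content": "a" * 400},      # ~100 tokens
    {"role": "assistant", "content": "b" * 200}, # ~50 tokens
    {"role": "user", "content": "c" * 80},       # ~20 tokens
]
trimmed = trim_history(history, budget=100)
print([m["role"] for m in trimmed])  # ['system', 'assistant', 'user']
```

With a 100-token budget, the oldest user message no longer fits, so it is dropped while the instructions and the two most recent turns are kept.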

While tokens and tokenization may seem complex at first glance, the core ideas are relatively simple. Tokens enable AI bots to converse in human language. Understanding how they work helps avoid common pitfalls and improves your experience. With practice, prompt engineering with tokens becomes second nature.

So the next time you hear “tokens” mentioned alongside ChatGPT or other hot AI trends, you’ll know exactly what it means and why it matters. The token system forms the foundation for translating human communication into machine logic. As AI advances, so too will its ability to generate rich information from limited input tokens.
